Moscow knows what it is doing and Washington should take note.

The West got a fresh jolt from Moscow last week, when Russian defense minister Sergey Shoygu announced that Russian armed forces would hold a military exercise in September, called “Vostok-18” [East-18], on a scale not seen since the early 1980s. If there was any doubt that Russia sees itself in a “New Cold War,” Shoygu’s direct reference to the massive “Zapad” [West] exercise of 1981 seems to confirm that this is the prevailing mentality in the Kremlin. In fact, Shoygu claimed, “They (the exercises ‘Vostok-2018’) will in some ways recall ‘Zapad-81,’ but in other ways, actually, will be even larger in scale [Они (учения ‘Восток-2018’) в чём-то повторяют ‘Запад-81’, но в чем-то, пожалуй, ещё масштабнее].”

In the same statement, Shoygu declared that the exercise would involve more than one thousand aircraft, almost three hundred thousand soldiers, and nearly all Russian military installations in the Central and Eastern military regions, as well as the Northern and Pacific fleets. In the article cited above, moreover, the Russian defense minister is said to have asked the assembled journalists to imagine “when 36,000 pieces of equipment including tanks, armored personnel carriers, etc. are simultaneously on the march [когда одновременно на марше находятся примерно 36 тыс. единиц техники, включая танки, БТР, БМП].”

A couple of days later, on the occasion of a visit by a senior Chinese military delegation, Shoygu offered that the relationship between Moscow and Beijing had reached “an unprecedented high level.” Interestingly, on that occasion he discussed the participation of Chinese units alongside Russian units in the Shanghai Cooperation Organization’s (SCO) anti-terrorism exercises that had just taken place in Chelyabinsk Oblast, but did not seem to mention the planned cooperation for Vostok-18. It does seem, then, that Russia-China military cooperation has genuinely been regularized, with one exercise or exchange following closely upon the next and reaching ever higher levels of intensity and scope.

Apart from the vast scale of the exercise, the fact that Chinese forces have a role seems to have dominated reporting on Vostok-18. But at least two critical analytical points seem to have been missed. First, the location of the exercise no doubt reflects the Kremlin’s desire to cool down tensions in the European theater. At a time of emerging fissures within the Trans-Atlantic Community, such an enormous exercise close to NATO countries would be excessively provocative and counter to Russia’s interests. That the Kremlin understands this is no doubt a good thing for European security. The other important point that has not registered in most Western analyses is the confluence of the September Eastern Economic Forum in Vladivostok and the Vostok-18 exercise. It’s easy to forget that six months ago, it looked more than a little likely that a massive war would engulf Northeast Asia. The exercise was most likely put together as a show of force meant to favorably influence diplomacy and the related “correlation of forces” in and around the Korean Peninsula.

Undoubtedly, Russia-China strategic cooperation has also reached a new stage. As an example of new energy and synergy in their bilateral relationship, Moscow has likely been impressed by Beijing’s willingness to explicitly support the new concept of a “Polar Silk Road” as a critical part of the larger Belt and Road initiative. For instance, China’s announcement that it will build a nuclear icebreaker (with likely Russian assistance) can be viewed as a rather serious commitment to the smooth operation of the revitalized Northern Sea Route (NSR). Indeed, Chinese investment is likely to play a crucial role in realizing Russia’s long-held dream of a dynamic maritime corridor along its northern coast, bringing some measure of both prestige and prosperity.

Yet as good as this sounds, it is fair to say that not all Russians are so optimistic, and some have even suggested that Vostok-18 carries a double message that is also meant to warn Beijing. I have recently described in this forum at least one prolific Russian strategist who considers China the preeminent threat to Russian national security. Indeed, the Russian media seemed to be registering some disquiet last week over the possible setup of a Chinese military base (or training facility) in eastern Afghanistan. One analysis concluded that Beijing might station over five hundred soldiers at that facility, but assessed that the main purpose was to combat terrorism and soberly concluded that “the Chinese are acting with extreme caution…[китайцы действуют крайне осторожно].”

Oddly, the Chinese may be talking more about Vostok-18 than the Russians, at least so far. A recent discussion in Global Times [环球时报], for example, crowed that 3,200 Chinese soldiers would participate and that the contingent would also bring thirty aircraft. The article treats this new development as a partial break with the past, in which Russia-China exercises were small-scale [规模比较小]. But it also notes that Vostok-18 is not a joint exercise [联合军演], but rather Chinese participation in a large-scale Russian exercise. The authors note that Western observers tend to hold one of two contrasting interpretations of Russia-China relations: either as dysfunctional or, at the other end of the spectrum, as an already existing alliance. The article suggests both interpretations are off the mark. The authors emphatically reject the idea of a Russia-China military alliance, noting that it would represent “such a huge blow against global stability [那对全球稳定将带来多么巨大的冲击].” On reflection, this seems to be a rather mature view of multi-polarity and provides ample food for thought for Western readers. That might be the point, of course.

Turning back to the Kremlin’s motives, a logical reason why the Russian press is comparatively quiet about Vostok-18 could be that the guns-and-butter debate in Russia is becoming ever more acute. Against the background of significant protests on the sensitive issue of pension reform, the Kremlin may be a little less eager to flex its military muscles. Yet this attribute seems to be hard-wired into the Russian DNA. Alexander Solzhenitsyn’s masterly August 1914, a title I found in an obscure second-hand bookstore over the summer, gives readers some additional historical perspective on Russian leaders’ obsession with rooting out military incompetence. How would history have turned out differently if the Czar’s armies had not suffered catastrophic defeat at Tannenberg? Of course, that defeat followed hard upon grave military failures in the disastrous war against Japan.

Would there have ever been a Bolshevik revolution without these military failures a century ago? Perhaps the Russians can be forgiven for exercising the troops.

And what about the vexing Korean problem, which has seen very significant backsliding over the last few weeks and yet may form the most potent explanation for this iteration of Vostok-18? There was a possibility not long ago that Kim Jong-un and Moon Jae-in would both actually attend the Eastern Economic Forum in Vladivostok in September. With Xi Jinping and Shinzo Abe also likely in attendance, that could have been a peace-making opportunity of epochal proportions.

Unfortunately, it seems that both Kim and Moon have most likely opted to pass up this opportunity in favor of holding another inter-Korean summit. While that is also of great importance, it is still regrettable that all sides have failed to recognize the vital role of personal diplomacy in the emerging multi-polar world of cross-cutting cleavages, as well as the imperative to develop solutions that actually conform to existing balances of power. To state the obvious, all the leaders of Northeast Asia should gather urgently and regularly to try to iron out differences on the most pressing problems, especially denuclearization.

For American negotiators, there must be a realization that neither polite words nor symbolic (and worthless) gestures are adequate to accomplish the arduous task at hand. For now, at least, continued progress on the vital North Korea issue should form the very highest priority in U.S.-China relations, and indeed in U.S.-Russia relations as well.

Lyle J. Goldstein is research professor in the China Maritime Studies Institute at the United States Naval War College in Newport, RI. In addition to Chinese, he speaks Russian, and he is an affiliate of the new Russia Maritime Studies Institute at the Naval War College. You can reach him at goldstel@usnwc.edu. The opinions in his columns are entirely his own and do not reflect the official assessments of the U.S. Navy or any other agency of the U.S. government.

Image: A Russian serviceman walks past the Buk-1M missile system at the Army-2015 international military forum in Kubinka, outside Moscow, Russia, June 16, 2015. REUTERS/Maxim Shemetov

-(1).png)

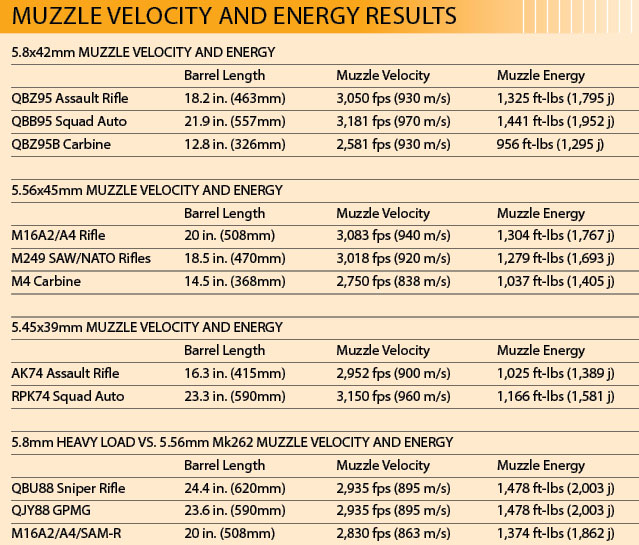

I shot the 5.8mm standard load with the QBZ95 rifle, averaging three-MOA groups at 100 meters. With a shooter more comfortable with the bullpup layout and a proper zero, 2½-MOA or better accuracy should be achievable with the same 5.8mm ammo-and-rifle combination. From my experience in the Marine Corps, the M855/SS109 5.56mm round has an average two-MOA or better accuracy when fired from the M16A2. The newer M16A4 with its heavier and higher-quality barrel is even more accurate.
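To put the MOA figures above in physical terms, the short sketch below converts an angular group size into a linear spread at a given distance. It assumes MOA means a true minute of angle (1/60 of a degree) and treats each quoted figure as the overall spread of the group; the function name `moa_to_group_size_cm` is illustrative and does not come from the original text.

```python
import math


def moa_to_group_size_cm(moa: float, distance_m: float) -> float:
    """Linear spread in centimetres subtended by `moa` minutes of angle at `distance_m` metres.

    Assumes a true minute of angle, i.e. 1/60 of a degree.
    """
    angle_rad = math.radians(moa / 60.0)
    # Distance is converted from metres to centimetres before scaling by the tangent.
    return 100.0 * distance_m * math.tan(angle_rad)


# Figures quoted in the paragraph above, all evaluated at 100 meters.
quoted = [
    ("QBZ95 with 5.8mm, as shot", 3.0),
    ("QBZ95 with 5.8mm, expected with practice", 2.5),
    ("M16A2 with M855/SS109", 2.0),
]

for label, moa in quoted:
    spread = moa_to_group_size_cm(moa, 100.0)
    print(f"{label}: {moa} MOA is about {spread:.1f} cm at 100 m")
```

Under these assumptions, one MOA is about 2.9 cm at 100 meters, so the three quoted figures correspond to groups of roughly 8.7 cm, 7.3 cm, and 5.8 cm respectively.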

Speaker Paul Ryan (WI)

Rep. Rob Wittman (VA)

Rep. Bob Latta (OH)

Rep. Richard Hudson (NC)

Rep. Rob Bishop (UT)

Rep. Jason Chaffetz (UT)

Rep. Cresent Hardy (NV)

Rep. Don Young (AK)

Rep. Bradley Byrne (AL)

Rep. Tim Walz (MN)

Senator Marco Rubio (FL)

Senator Roy Blunt (MO)

Senator Rob Portman (OH)

Senator Johnny Isakson (GA)

Senator Ron Johnson (WI)

Senator Richard Shelby (AL)

Senator John Cornyn (TX)

Senator Chuck Grassley (IA)

Senator Mike Crapo (ID)

Senator Kelly Ayotte (NH)